Page 61 - Data Science Algorithms in a Week


Naive Bayes

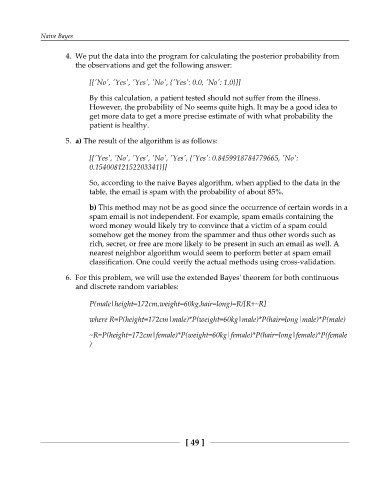

4. We feed the data into the program that calculates the posterior probability from the observations and get the following answer:

[['No', 'Yes', 'Yes', 'No', {'Yes': 0.0, 'No': 1.0}]]

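According to this calculation, the tested patient should not suffer from the illness. However, a probability of 1.0 (100%) for No seems too high to be trusted. An exact 0.0 for Yes can only arise when one of the factors in the naive Bayes product for Yes is zero, that is, when one of the patient's observed values never occurs together with the illness in the data. It may be a good idea to collect more data to get a more precise estimate of the probability that the patient is healthy.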
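The exact 0.0 can be reproduced with a minimal sketch. It assumes a small hypothetical data set (not the table from the exercise) and a hypothetical helper `posterior` that, like plain naive Bayes without smoothing, estimates every probability as a relative frequency. Because the value 'Yes' of the first feature never occurs with the class 'Yes', one factor of the product for Yes is zero.

```python
from collections import Counter

# Hypothetical training data (NOT the table from the exercise).
# Each row is (feature_1, feature_2, class). The value 'Yes' of
# feature_1 never occurs together with the class 'Yes'.
DATA = [
    ('No', 'Yes', 'Yes'),
    ('No', 'No', 'Yes'),
    ('Yes', 'Yes', 'No'),
    ('Yes', 'No', 'No'),
    ('No', 'Yes', 'No'),
]


def posterior(data, observation):
    """Naive Bayes posterior P(class | observation), estimating every
    probability as a plain relative frequency (no smoothing)."""
    class_counts = Counter(row[-1] for row in data)
    scores = {}
    for cls, cls_count in class_counts.items():
        rows = [row for row in data if row[-1] == cls]
        score = cls_count / len(data)  # prior P(cls)
        for i, value in enumerate(observation):
            # P(feature_i = value | cls); zero if the pair was never seen
            matches = sum(1 for row in rows if row[i] == value)
            score *= matches / cls_count
        scores[cls] = score
    total = sum(scores.values())
    if total == 0:
        raise ValueError("every class has zero probability for this observation")
    return {cls: score / total for cls, score in scores.items()}


print(posterior(DATA, ('Yes', 'Yes')))  # prints {'Yes': 0.0, 'No': 1.0}
```

Since the product for Yes contains the factor 0/2, no evidence from the other feature can lift it above zero, which is why a single unseen combination is enough to produce a "certain" answer.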

5. a) The result of the algorithm is as follows:

[['Yes', 'No', 'Yes', 'No', 'Yes', {'Yes': 0.8459918784779665, 'No': 0.15400812152203341}]]

So, according to the naive Bayes algorithm applied to the data in the table, the email is spam with a probability of about 85%.

b) This method may not be as good, since naive Bayes assumes that the words occur independently of each other given the class, whereas the occurrences of certain words in a spam email are not independent. For example, a spam email containing the word money would likely try to convince the victim that they could somehow get money from the spammer, so other words such as rich, secret, or free are more likely to be present in such an email as well. A nearest neighbor algorithm would seem to perform better at spam email classification. One could verify which method actually performs better using cross-validation.
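The cost of the independence assumption can be illustrated with a minimal sketch. The helper `spam_posterior` and all probabilities below are hypothetical. If the word rich occurs in exactly the same emails as money, seeing both carries no more evidence than seeing money alone, so the correct posterior is the same in both cases; naive Bayes, however, multiplies the same likelihood ratio twice and becomes overconfident.

```python
def spam_posterior(prior_spam, likelihoods):
    """Naive Bayes posterior P(spam | observed words).

    likelihoods holds one (P(word | spam), P(word | not spam)) pair per
    observed word; the naive assumption simply multiplies all the pairs.
    """
    spam = prior_spam
    not_spam = 1.0 - prior_spam
    for p_given_spam, p_given_not_spam in likelihoods:
        spam *= p_given_spam
        not_spam *= p_given_not_spam
    return spam / (spam + not_spam)


# Hypothetical numbers: "money" occurs in 60% of spam and 10% of other emails.
MONEY = (0.6, 0.1)
# Assume "rich" occurs in exactly the same emails as "money", so it has the
# same likelihoods but carries no additional evidence.
RICH = MONEY

only_money = spam_posterior(0.5, [MONEY])
money_and_rich = spam_posterior(0.5, [MONEY, RICH])
print(round(only_money, 3), round(money_and_rich, 3))  # prints 0.857 0.973
```

The correct answer for an email containing both words is still about 0.857; the jump to about 0.973 comes purely from counting dependent evidence twice.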

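6. For this problem, we will use the extended Bayes' theorem for both continuous and discrete random variables. Since height and weight are continuous, the terms for height and weight stand for probability density values rather than probabilities: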

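$$P(\text{male} \mid \text{height}=172\,\text{cm}, \text{weight}=60\,\text{kg}, \text{hair}=\text{long}) = \frac{R}{R + {\sim}R}$$

where

$$R = P(\text{height}=172\,\text{cm} \mid \text{male}) \cdot P(\text{weight}=60\,\text{kg} \mid \text{male}) \cdot P(\text{hair}=\text{long} \mid \text{male}) \cdot P(\text{male})$$

$${\sim}R = P(\text{height}=172\,\text{cm} \mid \text{female}) \cdot P(\text{weight}=60\,\text{kg} \mid \text{female}) \cdot P(\text{hair}=\text{long} \mid \text{female}) \cdot P(\text{female})$$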
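A minimal sketch of this formula, assuming for illustration (not necessarily as the exercise does) that height and weight are normally distributed within each sex, so their factors are normal densities from Python's `statistics.NormalDist`. The values in `PARAMS` and the helper `score` are hypothetical placeholders; real values would be estimated from the exercise's data.

```python
from statistics import NormalDist

# Hypothetical parameters (NOT estimated from the exercise's data):
# (mean, standard deviation) of height in cm and weight in kg, the
# probability of long hair, and the prior probability of each sex.
PARAMS = {
    'male': {'height': (176.0, 7.0), 'weight': (75.0, 10.0),
             'long_hair': 0.2, 'prior': 0.5},
    'female': {'height': (163.0, 6.5), 'weight': (60.0, 9.0),
               'long_hair': 0.7, 'prior': 0.5},
}


def score(sex, height, weight, long_hair):
    """R for 'male' or ~R for 'female': two normal densities (continuous
    variables) times a discrete probability (hair) times the prior."""
    p = PARAMS[sex]
    hair = p['long_hair'] if long_hair else 1.0 - p['long_hair']
    return (NormalDist(*p['height']).pdf(height)
            * NormalDist(*p['weight']).pdf(weight)
            * hair
            * p['prior'])


r = score('male', 172, 60, True)
not_r = score('female', 172, 60, True)
p_male = r / (r + not_r)  # P(male | height=172cm, weight=60kg, hair=long)
print(p_male)
```

The densities are not probabilities on their own, but because the same kind of density appears in both R and ~R, the ratio R/(R+~R) is still a proper probability between 0 and 1.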
